Clickhouse is a popular database for real time analytical queries. It is commonly used together with Kafka where the data streams from Kafka to Clickhouse and users run queries on Clickhouse.

In this article, we will connect a Kafka topic to a Clickhouse table using Upstash Kafka and Clickhouse Cloud. We will use the JDBC sink connector managed by Upstash to sync data from Kafka to Clickhouse. We will use managed services and you will have a complete pipeline without hosting any server or backend service.

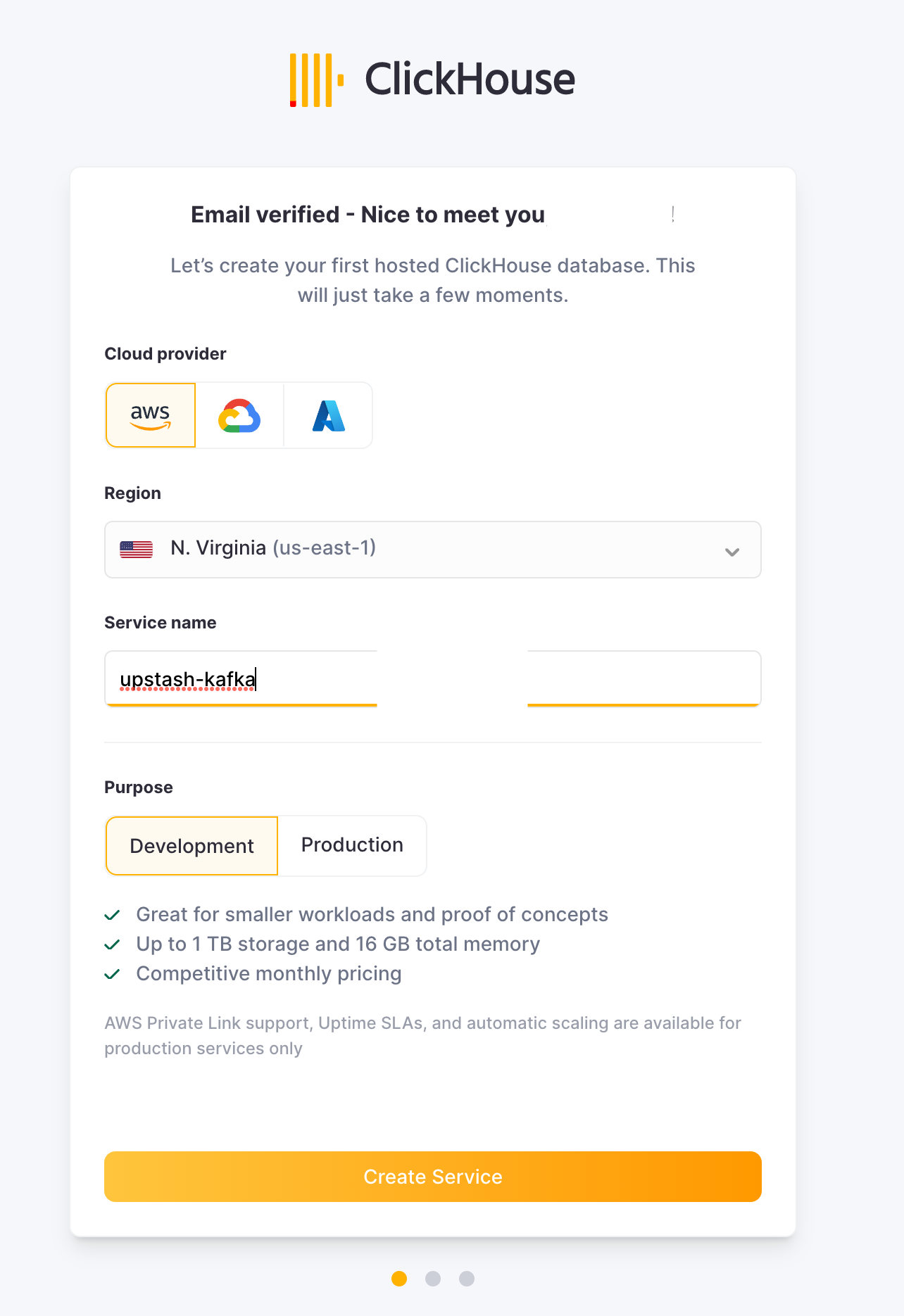

Step 1: Clickhouse Setup

You can create a managed service from Clickhouse Cloud with a 30 days free trial. Select your region and enter a name for your service. For simplicity, you can allow access to the service from anywhere. If you want to restrict to the IP addresses here is the list of Upstash addresses that needs permission:

52.48.149.7

52.213.40.91

174.129.75.41

34.195.190.4752.48.149.7

52.213.40.91

174.129.75.41

34.195.190.47

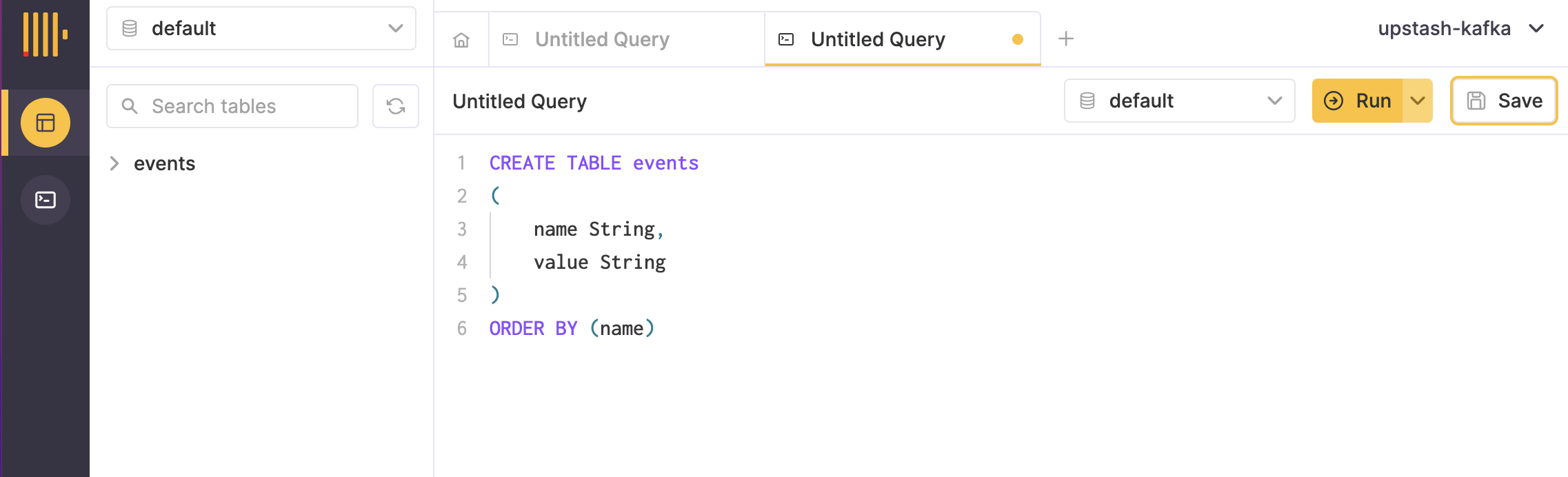

Create a table

On Clickhouse service screen click on Open SQL console. Click on + to open a new query window and run the following query to create a table:

CREATE TABLE events

(

name String,

value String

)

ORDER BY (name)

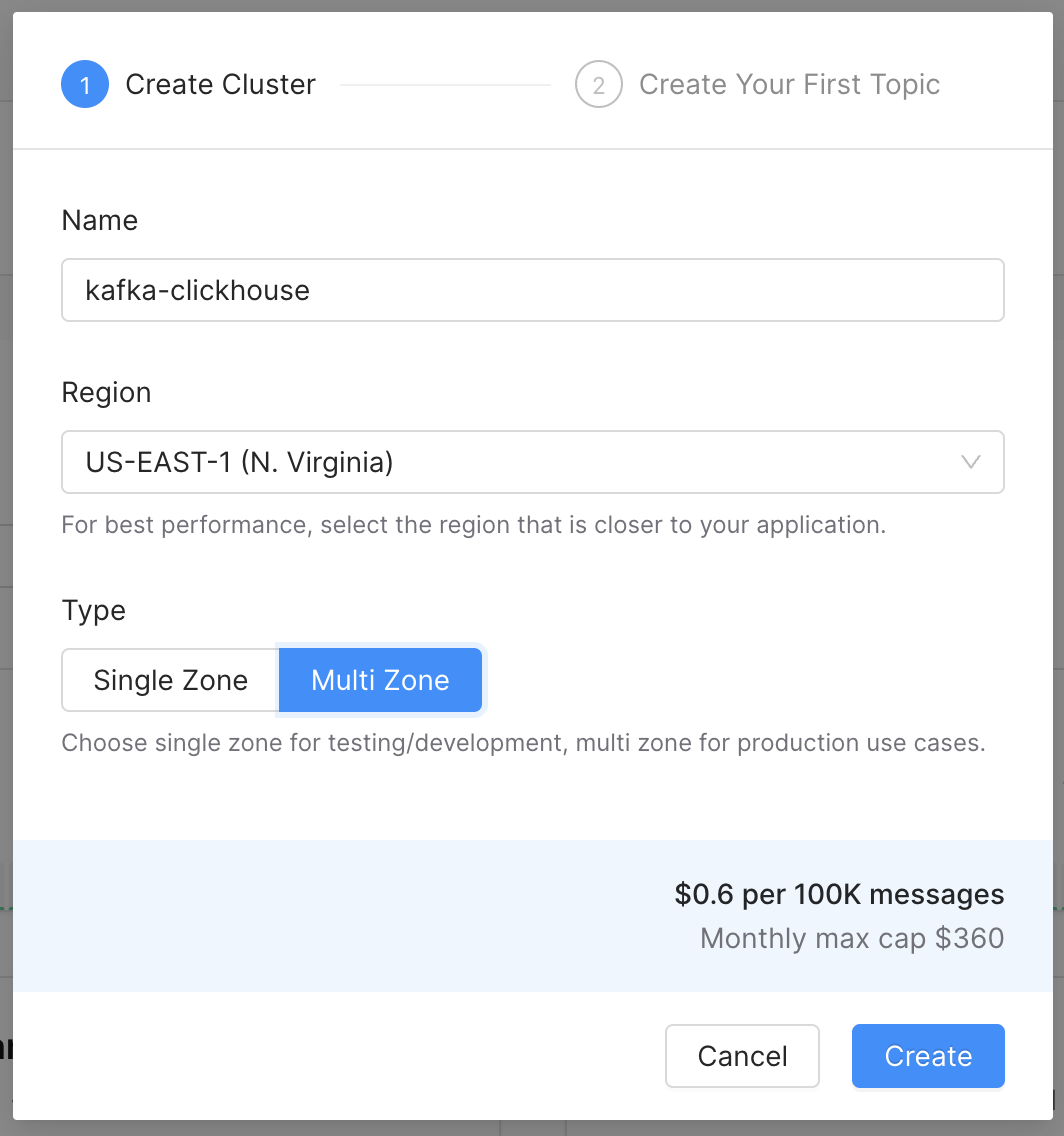

Step 2: Kafka Setup

We will create an Upstash Kafka cluster. Upstash offers serverless Kafka cluster with per message pricing. Select the same (or nearest) region with region of Clickhouse for the best performance.

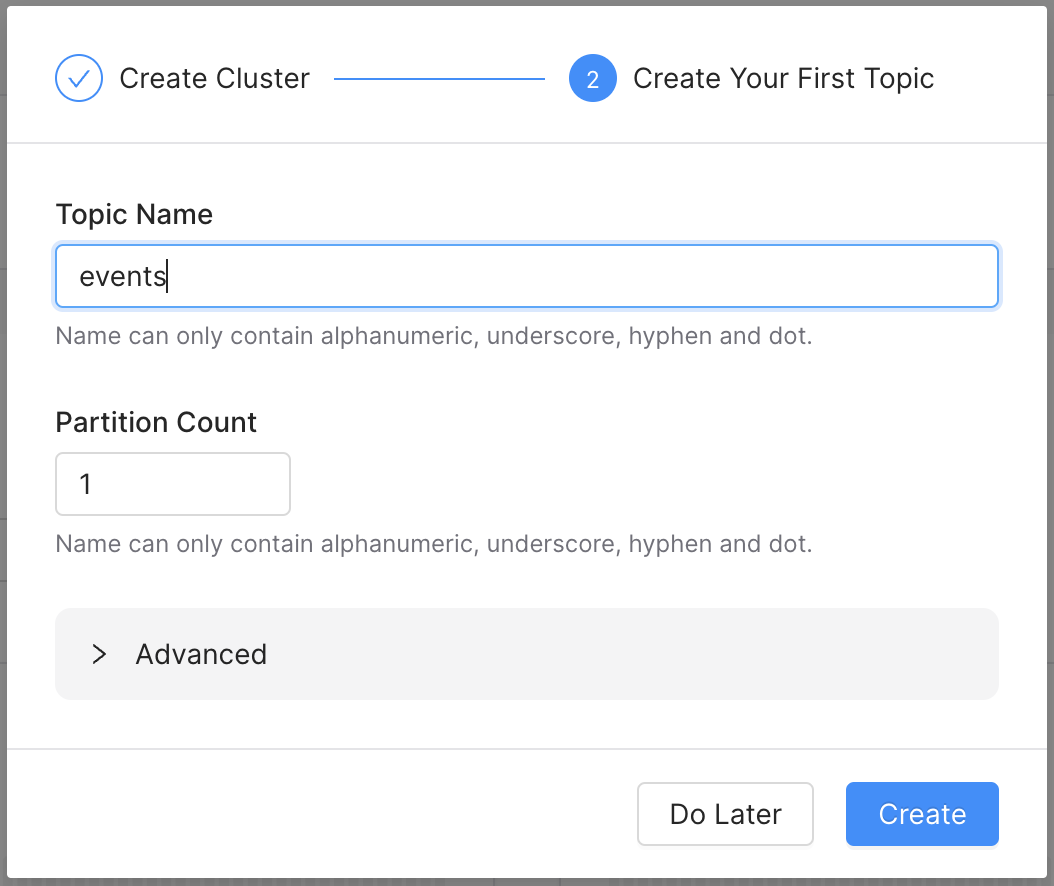

Also create a topic whose messages will be streamed to Clickhouse.

Step 3: Connector Setup

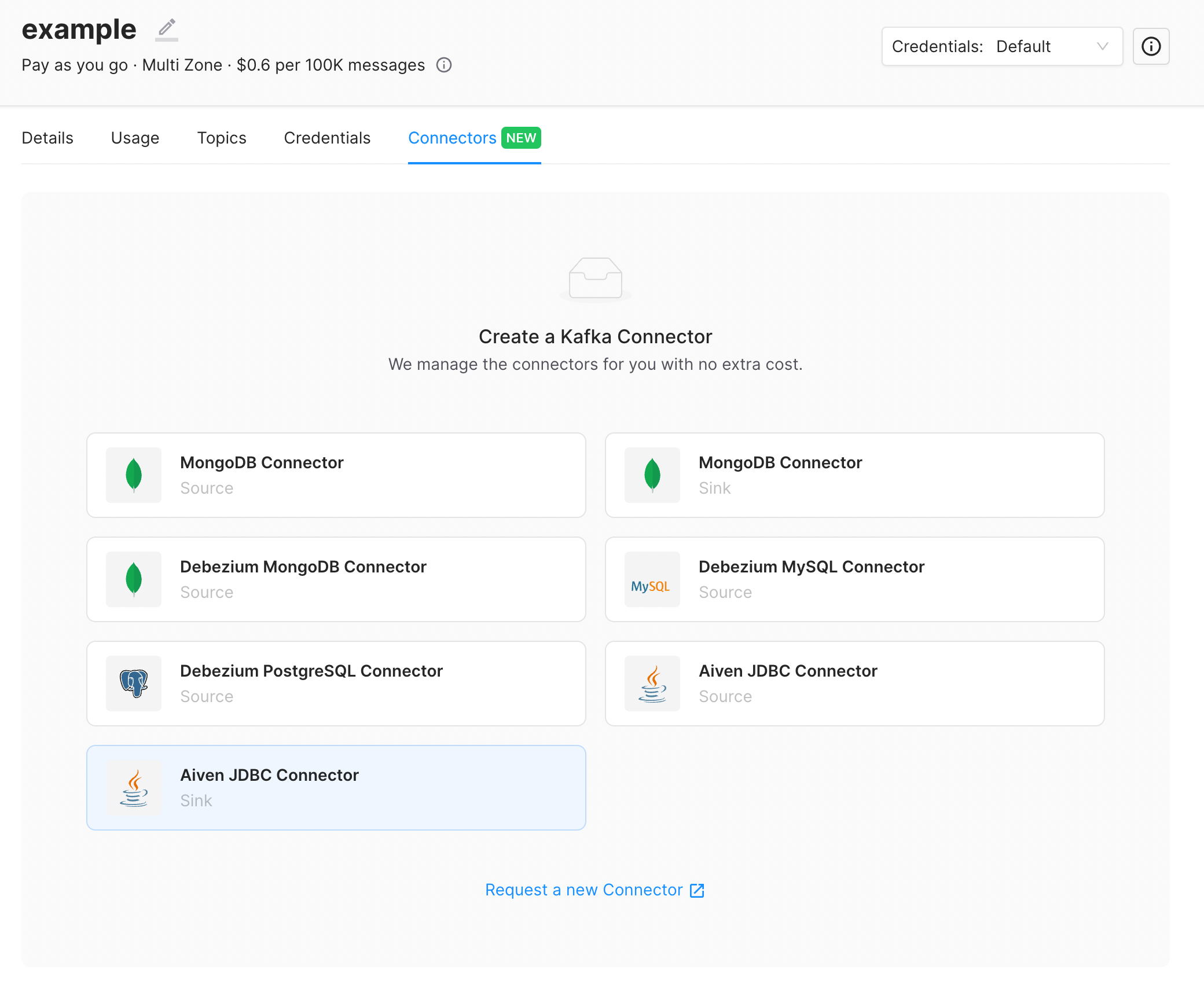

We will create a connector on Upstash console. Select your cluster and click on Connectors tab. Select Aiven JDBC Connector - Sink

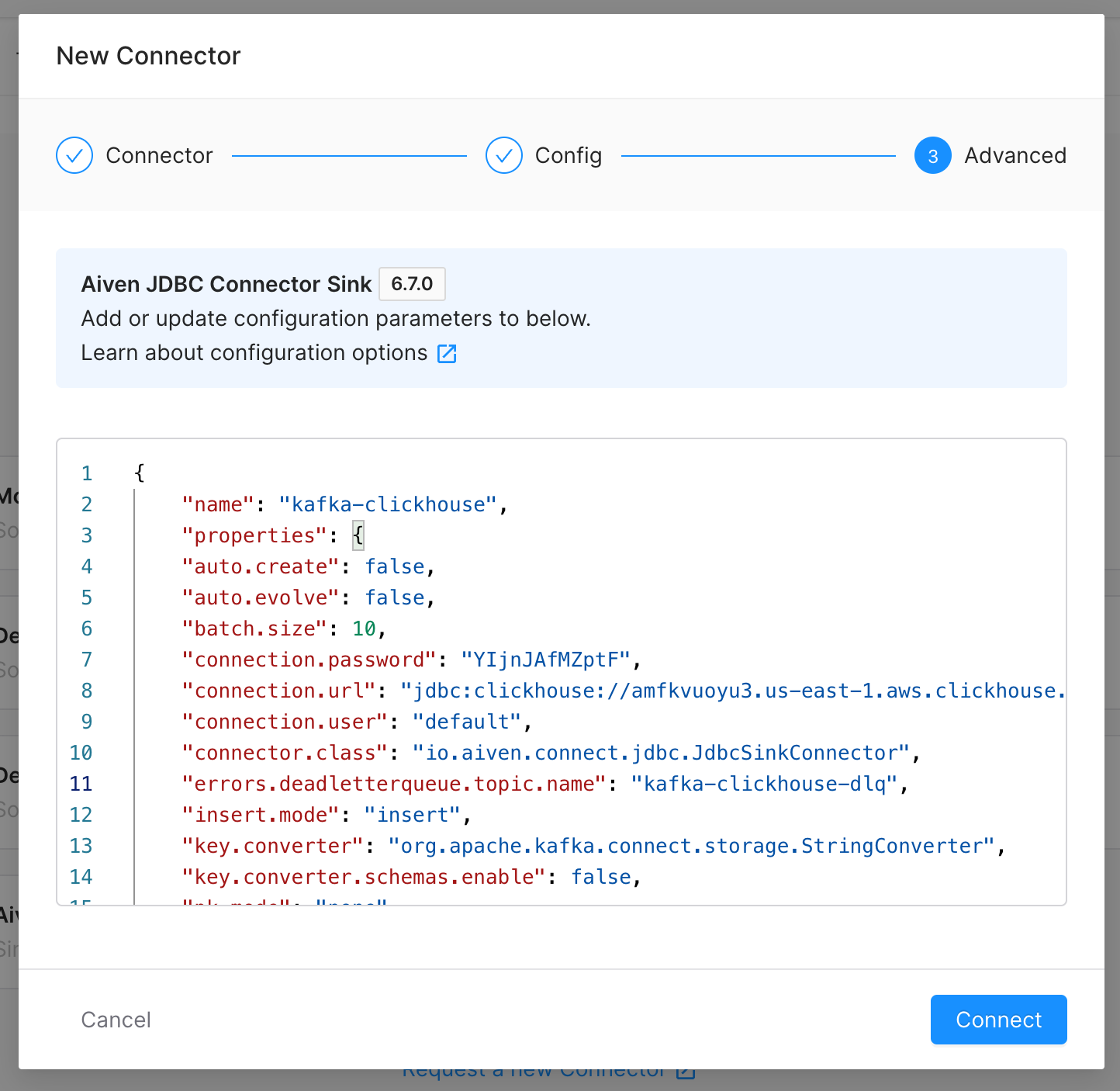

Click next to skip the Config step as we will enter the configuration manually at the third (Advanced) step.

In the third step. copy paste the below config to the text editor:

{

"name": "kafka-clickhouse",

"properties": {

"auto.create": false,

"auto.evolve": false,

"batch.size": 10,

"connection.password": "KqVQvD4HWMng",

"connection.url": "jdbc:clickhouse://a8mo654iq4e.eu-central-1.aws.clickhouse.cloud:8443/default?ssl=true",

"connection.user": "default",

"connector.class": "io.aiven.connect.jdbc.JdbcSinkConnector",

"errors.deadletterqueue.topic.name": "dlqtopic",

"insert.mode": "insert",

"key.converter": "org.apache.kafka.connect.storage.StringConverter",

"key.converter.schemas.enable": false,

"pk.mode": "none",

"table.name.format": "events",

"topics": "events",

"value.converter": "org.apache.kafka.connect.json.JsonConverter",

"value.converter.schemas.enable": true

}

}{

"name": "kafka-clickhouse",

"properties": {

"auto.create": false,

"auto.evolve": false,

"batch.size": 10,

"connection.password": "KqVQvD4HWMng",

"connection.url": "jdbc:clickhouse://a8mo654iq4e.eu-central-1.aws.clickhouse.cloud:8443/default?ssl=true",

"connection.user": "default",

"connector.class": "io.aiven.connect.jdbc.JdbcSinkConnector",

"errors.deadletterqueue.topic.name": "dlqtopic",

"insert.mode": "insert",

"key.converter": "org.apache.kafka.connect.storage.StringConverter",

"key.converter.schemas.enable": false,

"pk.mode": "none",

"table.name.format": "events",

"topics": "events",

"value.converter": "org.apache.kafka.connect.json.JsonConverter",

"value.converter.schemas.enable": true

}

}

Replace the following attributes:

- "name" : Name your connector.

- "connection.password": Copy this from your Clickhouse dashboard. (

Connect>View connection string) - "connection.url": Copy this from your Clickhouse dashboard. (

Connect>View connection string) - "connection.user": Copy this from your Clickhouse dashboard. (

Connect>View connection string) - "errors.deadletterqueue.topic.name": Give a name for your dead letter topic. It will be auto created.

- "topics": Enter the name of the topic that you have created.

Note that there should be ?ssl=true as a parameter for the connection.url.

Click the Connect button to create the connector.

Step 4: Test and Run

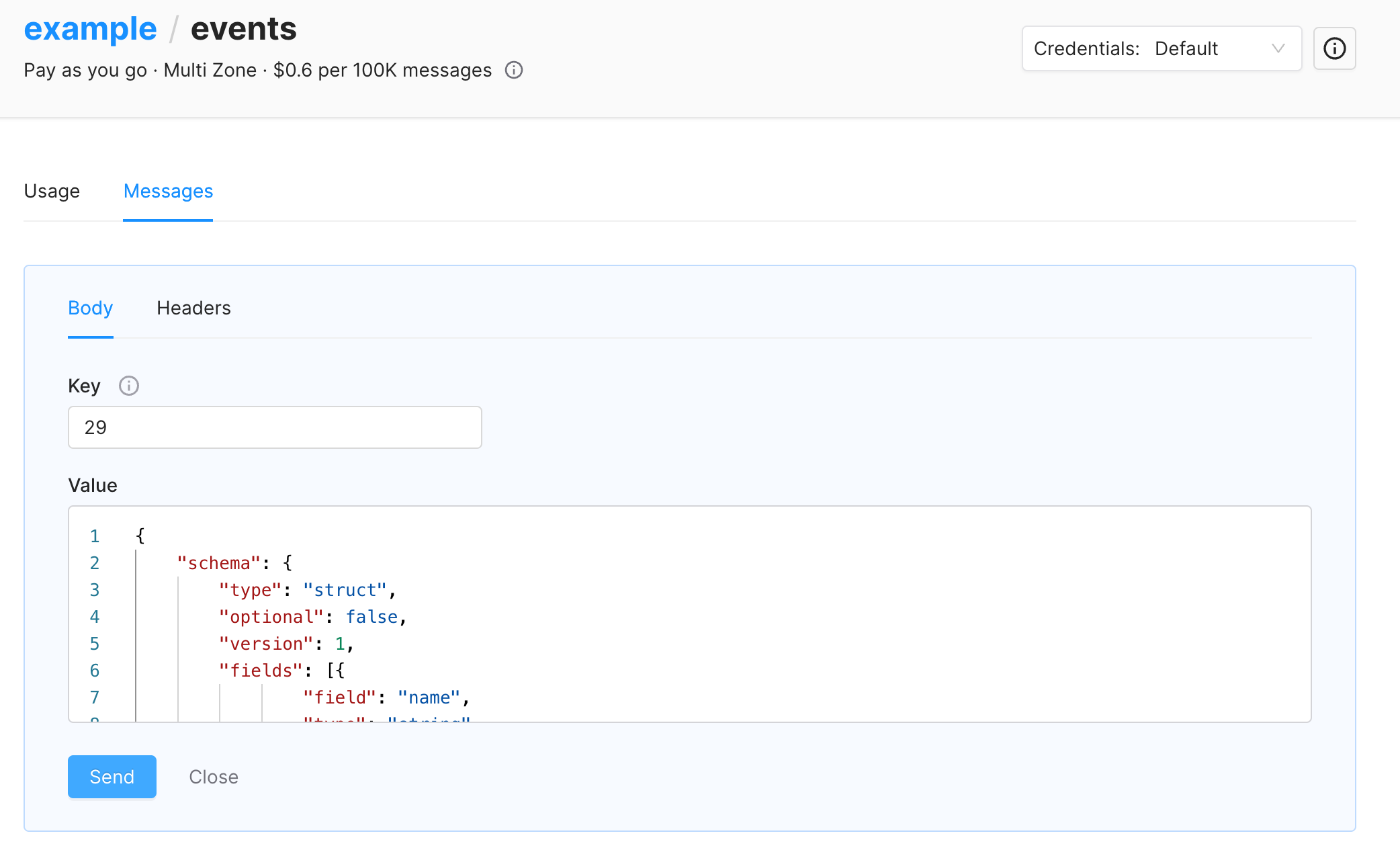

Now it is time to check if the configurations are correct. Go to your Upstash Kafka dashboard select your cluster. Click on Topics tab and select the topic that you have created at step 2. In the topic page, select the Messages tab. Enter the below json to the text area:

{

"schema": {

"type": "struct",

"optional": false,

"version": 1,

"fields": [

{

"field": "name",

"type": "string",

"optional": false

},

{

"field": "value",

"type": "string",

"optional": true

}

]

},

"payload": {

"name": "hello",

"value": "world!"

}

}{

"schema": {

"type": "struct",

"optional": false,

"version": 1,

"fields": [

{

"field": "name",

"type": "string",

"optional": false

},

{

"field": "value",

"type": "string",

"optional": true

}

]

},

"payload": {

"name": "hello",

"value": "world!"

}

}

Click on Send several times. You may update payload and Send again.

Yes, I know it is not ideal to send the schema together with payload. Schema registry is a solution. Upstash will launch managed schema registry service soon.

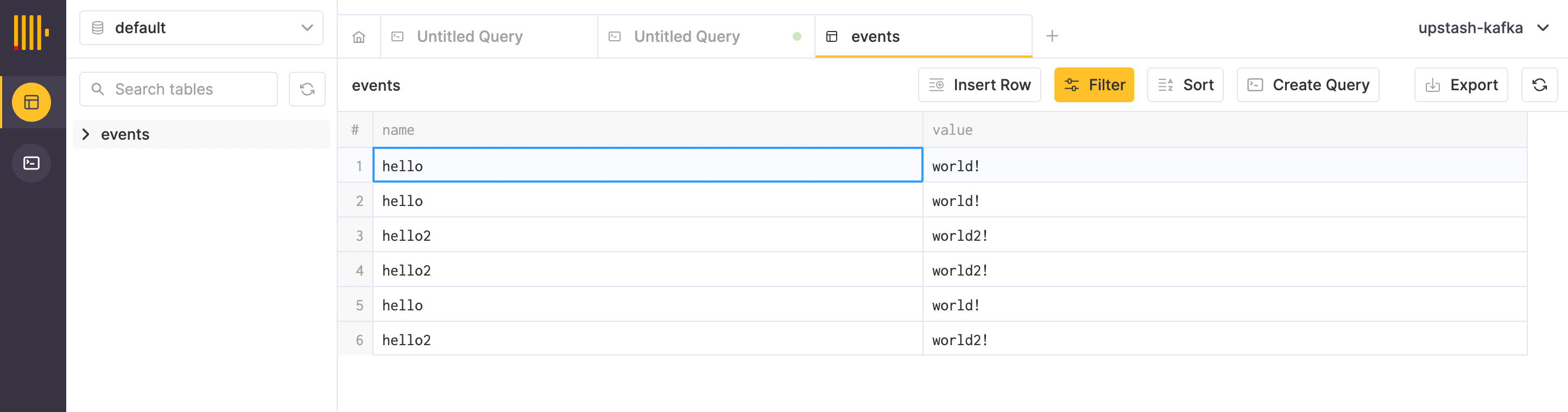

Now, go to the Clickhouse console. Connect > Open SQL console. Click on events (your table's name) on the left menu. You should see the table is populated like below: